OpenAI Releases DALL-E 3: Pushing the Limits in Detail and Prompt Fidelity

OpenAI has released its latest AI image generator, DALL-E 3, which promises to push the boundaries in terms of detail and prompt fidelity. This new model, fully integrated with ChatGPT, aims to render images by closely following complex descriptions and handling in-image text generation.

Because the model responds more closely to details and text, prompt engineering may become far less necessary: OpenAI presents DALL-E 3 as creating engaging, faithful images by default, without extra prompting techniques.

DALL-E 3 is currently in research preview and is set to be available to ChatGPT Plus and Enterprise customers in early October, offering a considerably more capable image-synthesis model than previous versions.

1. Introduction

Welcome to the world of advanced AI image generation! OpenAI has unveiled DALL-E 3, the latest version of its AI image-synthesis model. The release promises enhanced detail rendering and improved prompt fidelity. By integrating the model with ChatGPT, OpenAI aims to make prompt engineering far less necessary. In this article, we look at the features, availability, and training techniques of DALL-E 3, and at how it compares to previous versions.

2. OpenAI Releases DALL-E 3

OpenAI’s DALL-E 3 brings a new level of sophistication to AI image generation. With its integration with ChatGPT, this powerful model pushes the boundaries of what is possible in the world of AI-generated images. Let’s explore some of the key aspects of this release:

2.1 Integration with ChatGPT

One of the most exciting aspects of DALL-E 3 is its seamless integration with ChatGPT. This means that ChatGPT users will now have access to the advanced image generation capabilities of DALL-E 3. The AI assistant will become a brainstorming partner, allowing for conversational refinements to images. By generating images based on the context of the ongoing conversation, OpenAI opens up new possibilities for creative expression.

2.2 Availability

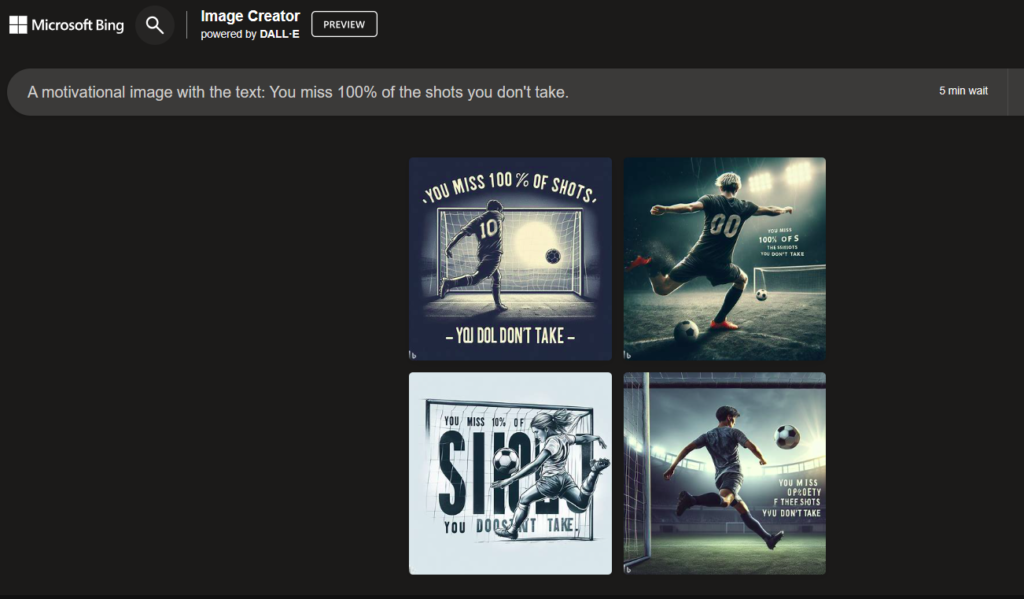

While DALL-E 3 is currently in research preview, OpenAI plans to make it available to ChatGPT Plus and Enterprise customers in early October. This eagerly anticipated release will give users the opportunity to create AI-generated images directly from ChatGPT. DALL-E 3 is also available free of charge, for a limited period, in Microsoft’s Bing image generator.

2.3 Training Techniques

Though OpenAI has not disclosed the technical details of DALL-E 3, it is likely that the model follows a similar training process to its predecessors. Previous versions of DALL-E were trained on millions of images created by human artists and photographers, some of which were sourced from stock websites. DALL-E 3 is expected to incorporate new training techniques and benefit from increased computational training time, resulting in even more impressive image synthesis capabilities.

2.4 Comparison to Previous Versions

In terms of following prompts, DALL-E 3 surpasses its predecessors by a wide margin. OpenAI’s cherry-picked examples demonstrate the faithful execution of prompt instructions and the convincing rendering of objects with minimal deformations. Compared to DALL-E 2, DALL-E 3 excels at refining small details, such as hands, without the need for additional prompt engineering. OpenAI has truly raised the bar with this latest release, setting a new standard for AI-generated images.

3. Pushing the Limits in Detail

DALL-E 3 pushes the limits of AI image generation by producing highly detailed and realistic images. Let’s explore some of the remarkable capabilities of this cutting-edge model:

3.1 Rendering Complex Descriptions

DALL-E 3 excels at accurately rendering complex descriptions. The model closely follows the intricate details provided in the prompts, resulting in images that faithfully represent the desired concepts. This ability to understand and incorporate nuanced instructions sets DALL-E 3 apart from its competitors.

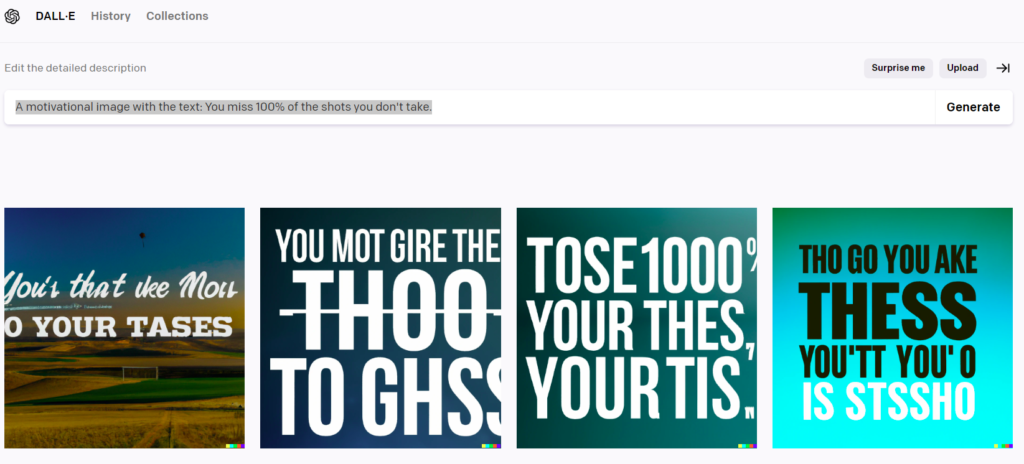

3.2 Handling In-Image Text Generation

A notable advancement in DALL-E 3 is its improved capability to handle text within images. Where its predecessor often produced garbled lettering, DALL-E 3 can generate in-image text, such as labels and signs, much more reliably. Incorporating legible text into the images makes for a more coherent visual result.

3.3 Examples of High Detail Rendering

OpenAI has shared several captivating examples that showcase the high level of detail and realism achieved by DALL-E 3. From illustrations of avocados expressing emotions to landscapes made entirely of various meats, the images generated by DALL-E 3 are both stunning and imaginative. These examples demonstrate the extraordinary precision and creative potential of this AI image generator.

3.4 Comparison to Competing Models

In comparison to other AI image-synthesis models, DALL-E 3 stands out as a leader in terms of detail rendering. While competing models may excel in rendering photorealistic details, they often require complex prompt manipulation to achieve desired results. DALL-E 3, on the other hand, produces remarkable images with minimal effort, making it a user-friendly choice for both experts and newcomers to the field.

4. Prompt Fidelity

DALL-E 3 not only pushes the limits of detail but also delivers exceptional prompt fidelity. Here’s what you need to know about this aspect of the model:

4.1 Text Handling within Images

One of the impressive features of DALL-E 3 is its ability to handle text within images. By accurately generating text in the desired context, DALL-E 3 enhances the realism and coherence of the generated images. This advancement opens up new possibilities for incorporating narrative elements into AI-generated visuals.

4.2 Built on ChatGPT

DALL-E 3 has been “built natively” on ChatGPT, enabling a closer integration between the AI assistant and the image generator. This integration allows for conversational refinements and prompts that take the ongoing conversation into account. The seamless collaboration between ChatGPT and DALL-E 3 promises to deliver a more intuitive and collaborative creative process.

4.3 Conversational Refinements and New Capabilities

By integrating DALL-E 3 with ChatGPT, OpenAI has introduced conversational refinements to AI image generation. Users can now discuss, revise, and refine image prompts in a conversational manner, allowing for better exploration of creative ideas.

This new capability of ChatGPT, powered by DALL-E 3, opens up exciting opportunities for users to interact with AI models in more natural and creative ways.
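
The conversational refinement described above can be pictured with a small sketch. Everything in it is hypothetical: `ImageConversation`, `refine`, and `compose_prompt` are illustrative names, not part of any OpenAI API, and OpenAI has not published how ChatGPT passes conversation context to DALL-E 3. The sketch only shows the general idea of carrying earlier requests forward, so that a follow-up such as "make it night time" modifies the same image idea instead of starting over.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ImageConversation:
    """Hypothetical helper that accumulates image requests across chat turns."""

    base_request: str
    refinements: List[str] = field(default_factory=list)

    def refine(self, instruction: str) -> None:
        """Record a follow-up instruction, ignoring empty input."""
        cleaned = instruction.strip()
        if cleaned:
            self.refinements.append(cleaned)

    def compose_prompt(self) -> str:
        """Merge the original request and all refinements into one prompt string."""
        if not self.refinements:
            return self.base_request
        return f"{self.base_request}. Refinements: " + "; ".join(self.refinements)


# Example conversation: one initial request followed by two revisions.
convo = ImageConversation("A watercolor lighthouse on a cliff")
convo.refine("make it night time")
convo.refine("add a sign that reads 'Harbor'")
print(convo.compose_prompt())
```

In a real assistant the merged request would likely be rewritten by the language model into a fuller description rather than concatenated, but the bookkeeping of "original idea plus revisions" is the part this sketch is meant to illustrate.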

5. Conclusion

OpenAI’s DALL-E 3 represents a significant leap forward in AI image generation. With enhanced integration with ChatGPT, improved detail rendering, and exceptional prompt fidelity, this latest release sets new standards for the field. Whether you’re a professional artist, a business owner looking for images, or simply an enthusiast, the power of DALL-E 3 is at your fingertips.

As AI technology continues to evolve, we can expect even more groundbreaking advancements that will push the boundaries of creativity and imagination. Get ready to explore the limitless possibilities of AI-generated images with DALL-E 3 and ChatGPT!

FAQ

Q: What is DALL-E 3?

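A: DALL-E 3 is the third major version of OpenAI’s DALL-E text-to-image model, which generates images from textual descriptions. (It was the original DALL-E, not DALL-E 3, that OpenAI described as a version of GPT-3 trained to generate images.)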

Q: Who developed DALL-E 3?

A: DALL-E 3 was developed by OpenAI, a leading artificial intelligence research lab.

Q: What is the main purpose of DALL-E 3?

A: The main purpose of DALL-E 3 is to generate images from textual descriptions, creating a large set of unique, creative images from textual inputs.

Q: How does DALL-E 3 work?

A: DALL-E 3 works by using machine learning algorithms trained on a dataset of text-image pairs. It learns to understand the contents of an image and the corresponding text, and to generate an image that corresponds to any given text input.

Q: What are some potential applications of DALL-E 3?

A: Potential applications of DALL-E 3 include art and design, autonomous creation of content for games or virtual realities, and a new way of interfacing with AI.

Q: What model is DALL-E 3 based on?

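A: OpenAI has not disclosed the full technical details of DALL-E 3. The original DALL-E was a 12-billion-parameter version of GPT-3 adapted to generate images, but that description does not carry over to DALL-E 3. OpenAI describes DALL-E 3 as “built natively” on ChatGPT, which is used to turn conversational requests into image prompts.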

Q: What type of AI is DALL-E 3 based on?

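A: DALL-E 3 is a generative text-to-image model. It is not based on generative adversarial networks (GANs). Its predecessor, DALL-E 2, uses a diffusion model, and OpenAI had not disclosed the full architecture of DALL-E 3 at the time of the announcement.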

Q: Has DALL-E 3 been used in any commercial applications so far?

A: As of now, DALL-E 3 is in research preview. It is slated for release to ChatGPT Plus and Enterprise customers in early October and can also be used through Microsoft’s Bing image generator.

Q: Can DALL-E 3 generate any kind of image?

A: The range of images DALL-E 3 can generate is vast, but it is not unlimited. It works best with prompts that resemble concepts well represented in its training data and may struggle with unfamiliar or highly specific requests.

Q: How is DALL-E 3 related to the original DALL-E?

A: DALL-E 3 is a later iteration of the original DALL-E, enhancing and expanding on the original model’s capabilities. All versions share the goal of generating images from text, but the underlying techniques have changed between generations, and DALL-E 3 benefits from the advancements made since the original DALL-E launched.